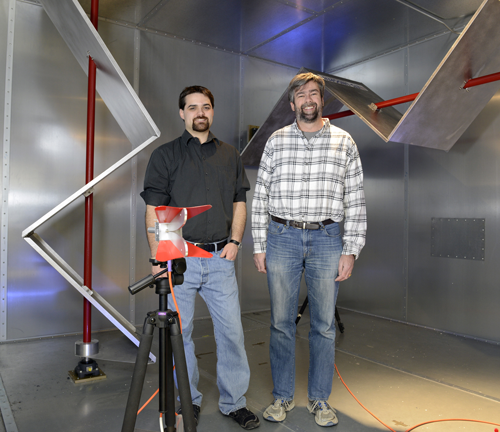

Jason Coder, left, and John Ladbury in the reverberation chamber used for studying interference between cell phones and various kinds of telecommunications equipment. The angled metal surfaces are ‘stirrers’ that are rotated in order to vary the direction and polarization of the signal. At front on the tripod is a microwave horn used in testing. Courtesy/NIST

NIST News:

When your cell phone talks to your cable TV connection, the conversation can get ugly. In certain conditions, broadband 4G/LTE signals can cause significant interference with telecommunications equipment, resulting in problems ranging from occasional pixelated images to complete loss of connection to the cable provider.

That’s the conclusion of a suite of carefully controlled tests recently conducted by Jason Coder and John Ladbury of the Electromagnetics Division in NIST’s Physical Measurement Laboratory (PML), in conjunction with CableLabs, an industry R&D consortium. The goal was to explore ways to quantify how 4G broadband signals interfere with cable modems, set-top boxes, and the connections to and from them, and to suggest test methods and identify key issues for defining a new set of standards for testing such devices.

The PML team found that, of the devices tested, nearly all set-top boxes and cable modems by themselves were able to handle 4G interference within the normal range of cell-phone transmission power. But retail two-way splitters performed poorly, and half of the consumer-grade coaxial cable products tested failed – that is, had substantial signal errors – at or below the normal power range.

“These days, everybody’s got a cell phone, and a vast number of them are 4G,” says Coder of the Radio-Frequency Fields Group. “It’s a common occurrence to check your phone or use your tablet while watching TV. This is a problem that cable companies and cell phone manufacturers hadn’t really considered in the past, when current standards were written. After all, a cable TV signal is completely conducted, and cell phones are entirely wireless. So interference between them didn’t seem to pose much of a threat. But now we have a situation where there are so many devices radiating either at the same time or in rapid succession that it starts to be noticeable to other devices.”

That situation is poised to get worse. The Federal Communications Commission will soon begin to auction off spectrum around 600 MHz. The principal operating frequencies of current 4G cell phones are in the range of 700 MHz to 800 MHz. But if cell phones move into the newly available lower frequencies, they will start to overlap the frequency range of many popular cable channels: 450 MHz to 650 MHz.
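The overlap argument can be checked with a few lines of interval arithmetic. This minimal sketch uses only the ranges quoted above (700 to 800 MHz for current 4G handsets, 450 to 650 MHz for popular cable channels, and a carrier near 600 MHz). The constant and function names are illustrative assumptions, not part of any NIST or CableLabs tool.

```python
# Frequency ranges quoted in the article, in MHz (treated as closed intervals).
CURRENT_4G_MHZ = (700.0, 800.0)
CABLE_CHANNELS_MHZ = (450.0, 650.0)


def overlaps(a, b):
    """Return True if closed intervals a=(lo, hi) and b=(lo, hi) share any frequency."""
    return a[0] <= b[1] and b[0] <= a[1]


def in_band(freq_mhz, band):
    """Return True if a single carrier frequency lies inside band=(lo, hi)."""
    lo, hi = band
    return lo <= freq_mhz <= hi


if __name__ == "__main__":
    # Today's 4G range sits entirely above the cable channels quoted here.
    print("4G vs cable overlap:", overlaps(CURRENT_4G_MHZ, CABLE_CHANNELS_MHZ))
    # A handset carrier near 600 MHz would land inside the cable range.
    print("600 MHz in cable band:", in_band(600.0, CABLE_CHANNELS_MHZ))
```

Under these ranges the first check is false and the second is true, which is the shift the paragraph describes.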

“More and more people,” says Ladbury, “are beginning to think that the current electromagnetic compatibility (EMC) standard needs revision.” That standard, IEC 61000-4-3, specifies that devices should be tested in an anechoic chamber using a single frequency coming from a couple of different angles. “Back then,” he says, “a typical communications signal was much different. It was an AM signal, and they determined a test method suited to the analog cell phone standards of the day and the few wireless devices that there were. But now things have changed dramatically.”

The PML team conducted its tests at three frequencies – 627 MHz, 711 MHz, and 819 MHz – that are a representative sample of current and future overlap between cell and cable frequencies. Instead of an anechoic chamber, they employed a reverberation chamber in which large horizontal and vertical “stirrers” (see photo above) are rotated to expose the devices under test to radiation from numerous angles of incidence and polarization. “It’s a controlled space that provides a sort of worst-case environment where all the power that your cell phone transmits stays in the cavity and bounces around and eventually comes in contact with the cable modem or set-top box,” says Ladbury. “In many respects, it’s more like the real world than an anechoic chamber. But ultimately you need a variety of environments.”
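Why stirring produces a “worst-case” yet statistically well-behaved exposure can be illustrated with a common idealized model of a well-stirred reverberation chamber. In that model, each stirrer position yields a field that is a sum of many reflected plane waves with random amplitudes and phases, so the received power follows an approximately exponential distribution. This sketch assumes that textbook model; it is not the NIST team’s procedure, and `n_waves`, `n_positions`, and `seed` are arbitrary illustrative parameters.

```python
import cmath
import math
import random
import statistics


def stirred_field_sample(rng, n_waves=64):
    """One stirrer position: sum of plane-wave contributions with random
    amplitudes and phases, normalized so the mean received power is 1."""
    total = 0j
    for _ in range(n_waves):
        amplitude = rng.gauss(0.0, 1.0)          # random projection onto the receive polarization
        phase = rng.uniform(0.0, 2.0 * math.pi)  # random path length after many bounces
        total += amplitude * cmath.exp(1j * phase)
    return total / math.sqrt(n_waves)


def received_powers(n_positions=5000, seed=1):
    """Relative power |E|^2 seen by a fixed device over many stirrer positions."""
    rng = random.Random(seed)
    return [abs(stirred_field_sample(rng)) ** 2 for _ in range(n_positions)]


if __name__ == "__main__":
    powers = received_powers()
    mean = statistics.fmean(powers)
    print("mean power:", round(mean, 3))
    print("std / mean:", round(statistics.pstdev(powers) / mean, 3))
    print("peak / mean:", round(max(powers) / mean, 2))
```

For an exponential distribution the standard deviation equals the mean, and the largest of several thousand samples typically sits several times above the mean. That spread is what lets a device under test encounter unusually strong field combinations as the stirrers rotate.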

For the purposes of this study, the researchers defined device failure as occurring when a TV picture became pixelated or a cable modem accumulated a number of uncorrectable packet errors. But they raise the question of whether that is the right criterion, noting that one might alternatively define failure as occurring at the point at which the user notices a problem. (But even then, how much data degradation is “too much”?) The researchers, who are in the process of publishing their results, expect to raise these and other questions at conferences and in technical committees to provide a starting point for consideration of a new EMC standard.
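The choice of failure criterion directly changes the measured result. This sketch treats failure as “uncorrectable errors exceed a threshold” and reports the lowest field level at which that happens. The function name, the toy device model, the field levels (arbitrary units), and the thresholds are all hypothetical; none of them are values from the study.

```python
from typing import Callable, Optional, Sequence


def failure_level(
    field_levels: Sequence[float],
    uncorrectable_errors_at: Callable[[float], int],
    max_allowed_errors: int,
) -> Optional[float]:
    """Return the lowest field level at which the device fails, or None if it
    survives every level. Failure means the uncorrectable-error count exceeds
    max_allowed_errors, a hypothetical, adjustable threshold."""
    for level in sorted(field_levels):
        if uncorrectable_errors_at(level) > max_allowed_errors:
            return level
    return None


def toy_device(level: float) -> int:
    """Hypothetical device: error-free up to level 10, then errors grow linearly."""
    return max(0, int((level - 10) * 50))


if __name__ == "__main__":
    levels = [1, 3, 10, 20, 30]  # arbitrary units
    # A strict criterion (any uncorrectable error) fails at a lower level
    # than a lenient one that tolerates some errors before a user might notice.
    print("strict:", failure_level(levels, toy_device, 0))
    print("lenient:", failure_level(levels, toy_device, 600))
```

The same device “fails” at different levels depending on the threshold, which is the ambiguity the researchers raise.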

Meanwhile, Ladbury says, improved consumer education and understanding could help minimize problems. “When the cable company installs cable in your house, they typically do it the right way and use quality components. The problem is that, once they leave, you have the freedom to change things – get a new TV, add a line splitter, run new cable, and so forth. And many consumers don’t have the knowledge to make the right decisions in those circumstances.

“And even if they do, adequate products may not be easily available. For example, we tested several retail-grade cable splitters and all of them were terrible. So for many people, the only option is to go to a trade-grade or professional-grade splitter. And they don’t sell those down at the mall.”

Similarly, cable quality and shielding play a major role in interference susceptibility. “But most consumers – and some companies – can’t easily discern differences in quality,” Coder says. “In Arizona recently, after a homebuilder installed coaxial lines in numerous homes, the cable company had to go door to door rewiring those houses because the quality of the cable was so low.

“Another thing most people aren’t aware of is the effect of a loose connector. We tested set-top boxes that manufacturers spent a lot of money designing. They had fantastic shielding, and top-quality cable attached. But we found that if you back off the connection threads even half a turn, the performance degrades substantially.”

Clearly, much more understanding is needed before, as Coder puts it, the emerging Internet of Everything becomes “the interference of everything.”